Incoming video chat. I swipe to answer. “Hey, Steve!” Noel Maghathe, a local artist whom I enlisted to help me view the show remotely, stands in the main gallery of The Carnegie in Covington, the site of FotoFocus’s new exhibition, AutoUpdate: Photography in the Electronic Age. AutoUpdate is an ambitious undertaking, featuring 44 regional artists. It accompanies a full-day symposium, held Oct. 5 at the gallery, exploring the impact of digital technologies on lens-based media.

Across the first floor, works communicate in a secret language. Heat-map chromatics in Gary Mesa-Gaido’s “String Theory Series #1-9a” mirror a rotating 3D grid in D Brand’s “Speech and Peanut Butter.” Two spoons behind its angled screen gesture toward Julie Jones’s saturated backyard photographs. Installations by Joshua Kessler and Joshua Penrose relate textile to images — for Kessler, ornamental pattern resolves into a grid of yearbook photos, while Penrose uses stretched linen as a kind of mesh filter to transform rear-projected video. Lori Kella’s “Rising to the Surface” depicts what I imagine it would look like to send fish encased in an ice block through an airport baggage scanner.

As with many of the works in the show, the strangeness of these images takes time to unfurl.

“Some scholars have argued that the latest crisis in the world of photography comes from ‘massification,’ referring to the sheer number of photographic images that are now made and disseminated,” Geoffrey Batchen says via email.

An academic who specializes in the history of photography, Batchen wrote the essay “Phantasm: Digital Imaging and the Death of Photography” in the 1994 monograph Metamorphoses: Photography in the Electronic Age, from which The Carnegie exhibit and symposium take their name. (The publication was a special edition of photography magazine Aperture.) In it, he described the technological and epistemological crises facing photography in the 1990s. I asked if this has changed.

He explains how “massification” alters how we relate to images and, consequently, to the world. “These, rather than questions of photography’s capacity for truth or falsehood, are the terms of contemporary debate,” he says.

In a nearby hallway at The Carnegie, ¡Katie B. Funk!’s “profile/profile” covers the surface of an interior window with printed snapshots. According to Exhibition Director Matt Distel, these photos were similarly installed in the artist’s street-level apartment. Anyone who turned to look at the building triggered a surveillance camera. Eight of the resulting photos are neatly framed a few feet away.

Mass surveillance is among the topics slated for the symposium, alongside “deepfakes” and post-truth, the fate of documentary filmmaking and others. The event’s keynote speaker is celebrated artist, author and MacArthur Fellowship recipient Trevor Paglen, whose multidisciplinary practice establishes a kind of poetics of mass surveillance. Other panelists and speakers include artist and filmmaker Lynn Hershman Leeson, artist Nancy Burson and microbiologist Dr. Elisabeth Bik.

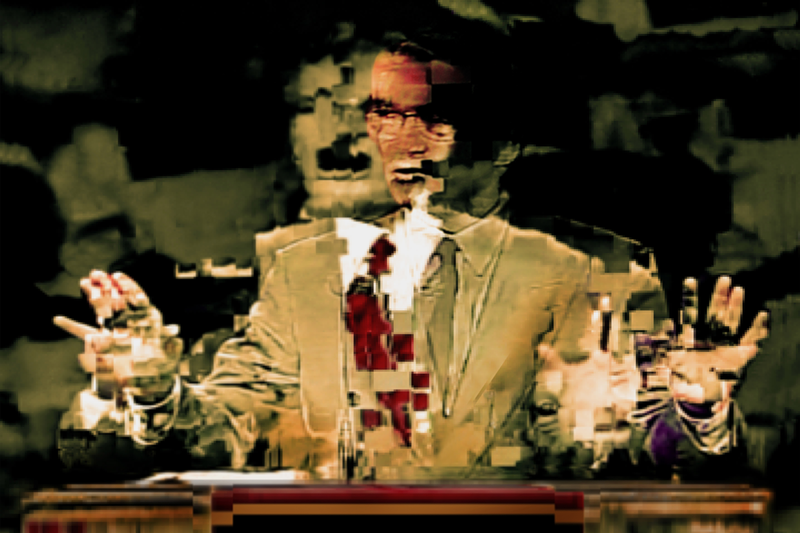

“Where’d you go?” Maghathe asks. “Is there something wrong with the feed?” At first, I didn’t realize they were holding their phone up to Tina Gutierrez’s “Seascape,” which resembles the glitchy blocks of an over-compressed JPEG file. “I’m joking, it’s only the art.”

Glitches show something of the data-essence of digital media, the poetry of which is explored elsewhere, too, in videos by Anna Christine Sands and images by Juan Sí Gonzalez. These works aestheticize the truism that underlying all our digital representation are machines talking to each other. This made me wonder: How would artificial intelligence trained to “see” images review AutoUpdate?

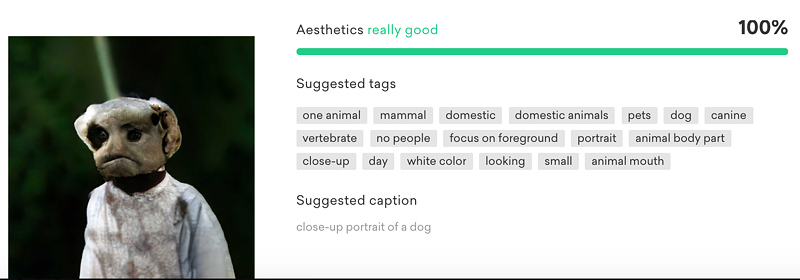

I uploaded 46 images from the press packet to an experimental service called EyeEm Vision, which uses machine learning to quantify and rank images’ aesthetic value. While the average aesthetic ranking was 75 percent (“really good”), exactly one piece of art received a perfect 100 percent: Emily Zeller’s inscrutable nightmare “Bow Tie, 46.28%,” depicting what seems to be a deformed fungal dog. EyeEm’s caption reads: “close-up portrait of a dog” alongside generated tag descriptors “domestic,” “canine,” “vertebrate,” “animal body part,” and others.

It’s interesting that EyeEm chose to lavish praise on this particular image. To create it, Zeller employed a Generative Adversarial Network (GAN). “The work is largely a collaboration between human and machine,” Zeller explains on her website. “I control these ratios, editing the ‘genes’ of my pieces, and then allow the GAN to generate what it believes is the resultant image.”

Maybe EyeEm recognized something of its own essence in “Bow Tie, 46.28%”? It’s worth pointing out that such “machine vision” processes aren’t magic. They’re designed to simulate how a human would rate or describe an image, and they are usually trained on millions of human-tagged images.

AI researcher Kate Crawford recently developed such a network for ImageNet Roulette, a project by FotoFocus symposium keynote speaker Paglen that uses AI to “classify” uploaded selfies, attempting to guess “what kind” of person is shown. The project’s goal is to demystify AI and expose its frequently problematic human biases.

Maybe it’s not surprising that human flaws are replicated in AI. Even so, I wanted to see how ImageNet Roulette would categorize “Bow Tie, 46.28%.” The result: “parrot,” which it defined as “a copycat who does not understand the words or acts being imitated.” I assume the AI does not grasp the irony here.

AIs like EyeEm, ImageNet Roulette and the one employed by Zeller function like lenses. Through them, we can see the world — and ourselves — in different ways. As Batchen pointed out, our present crises are relational. The human-image relationship reflexively points to the human-technology relationship.

In front of Britni Bicknaver’s “After Hours (The Carnegie Gallery, Covington, Kentucky),” I caught Maghathe’s reflection in the glossy video screen. As they held their phone at eye level, its edges disappeared inside their reflection. It was strange, seeing myself in someone else’s body, a phantom superimposed on shaky horror-movie footage. I had a similar feeling looking at Valerie Sullivan Fuchs’ video, “Floating City.” Here, skyscrapers loom above their reflection in an uncanny symmetry. The absence of ground flattens the pictorial space, tilting the structures in an abyss of sky. I found it difficult to place myself in relationship to the strange geometry unfolding across the gallery wall.

AutoUpdate set out to explore technology’s impact on lens-based practice. But it uncovered something deeper, a mirror turning our gaze back onto itself.

The exhibition itself functions like an algorithm, asking us: How has technology redefined what it means to be human?

AutoUpdate: Photography in the Electronic Age is on view at The Carnegie (1028 Scott Blvd., Covington) through Nov. 16 with a reception 5-8 p.m. on Oct. 4, followed by the all-day symposium on Oct. 5. More info: fotofocus.org.